Introduction to OpenMP


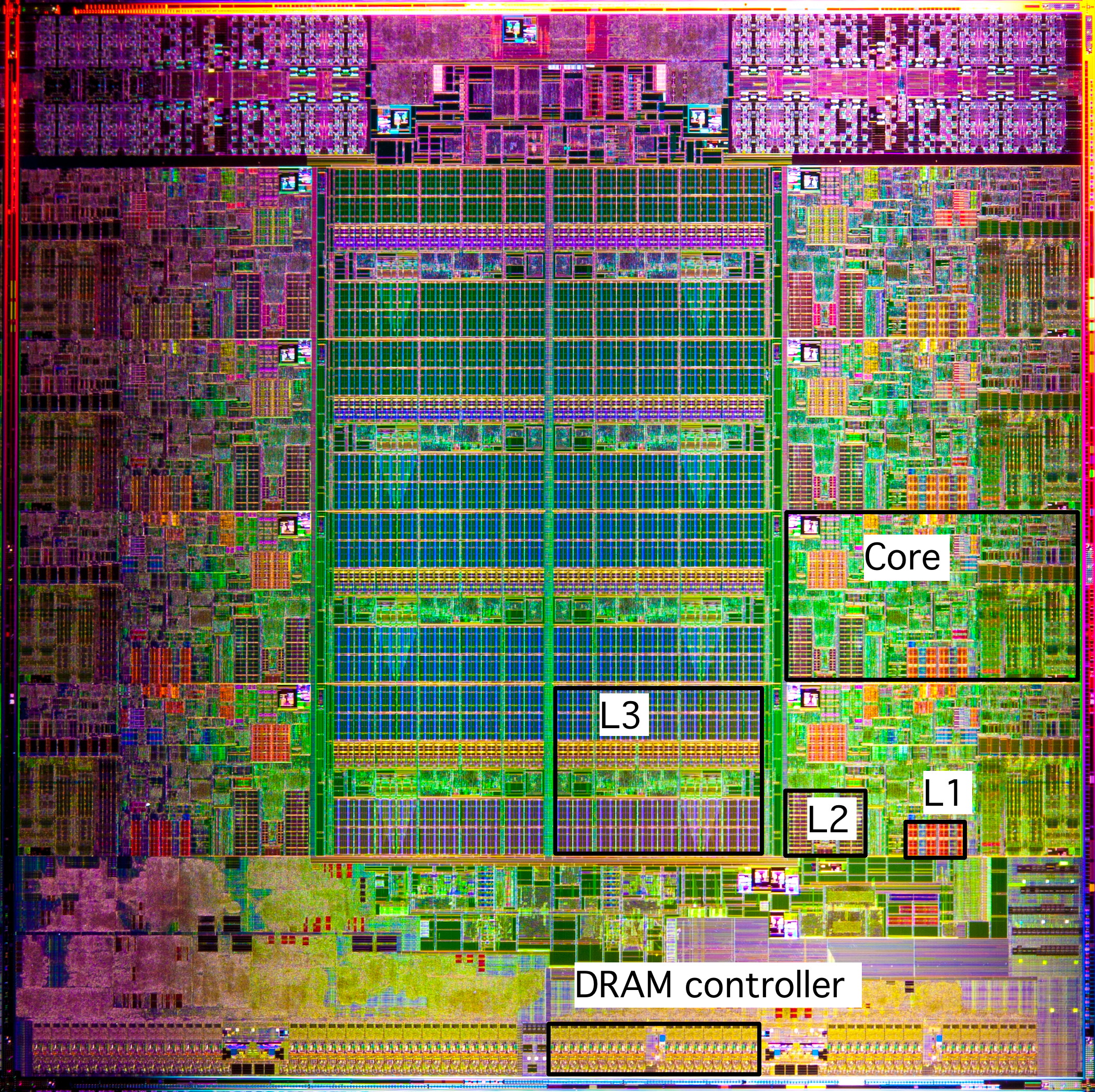

1. Target hardware

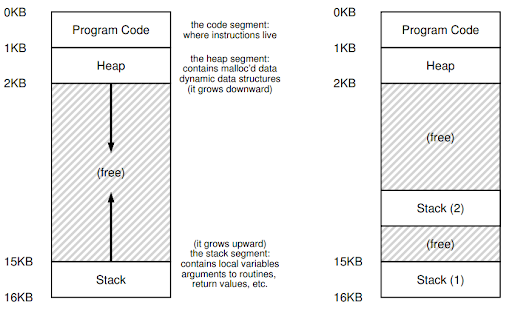

2. Thread

An abstraction for running multiple streams of execution within a single process

A normal process is a running program with a single point of execution, i.e., a single PC (program counter).

A multi-threaded program has multiple points of execution, i.e., multiple PCs.

Each thread is very much like a separate process, except for one difference:

All threads of the same process share the same address space and thus can access the same data.

POSIX threads (pthreads)

Standardized C language thread programming API.

pthreads specifies the interface for using threads, but not how threads are implemented in the OS. Different implementations include:

kernel-level threads,

user-level threads, or

hybrid

Say hello to my little threads …

Launch a Code Server on Palmetto

Open the Terminal

Create a directory called openmp, and change into that directory:

$ cd

$ mkdir openmp

$ cd openmp

Create thread_hello.c with the following contents:

Compile and run thread_hello.c:

$ gcc -o thread_hello thread_hello.c -lpthread

$ ./thread_hello 1

$ ./thread_hello 2

$ ./thread_hello 4

3. Target software

Provide wrappers for

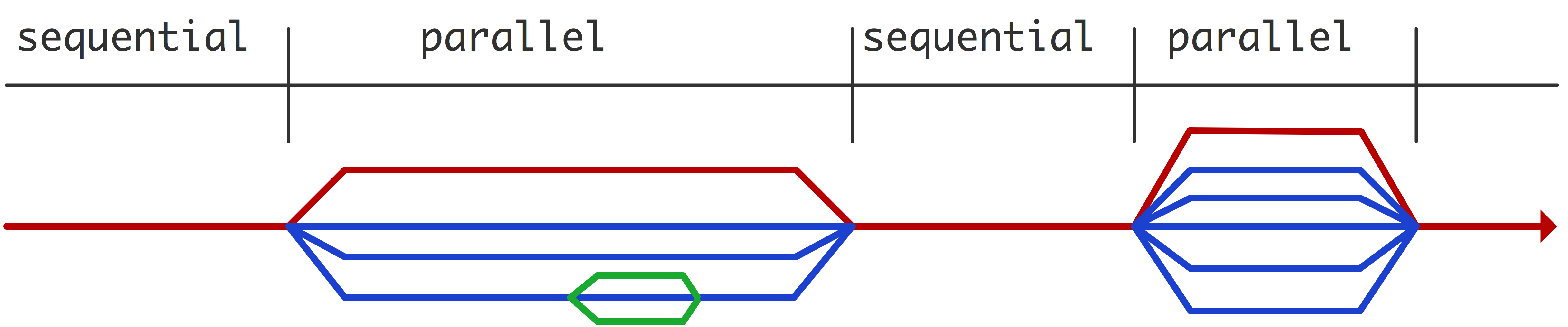

threadsandfork/joinmodel of parallelism.Program originally runs in sequential mode.

When parallelism is activated, multiple threads are forked from the original process/thread (the master thread). Once the parallel tasks are done, the threads are joined back to the original process, which returns to sequential execution.

The threads have access to all data in the master thread; this is shared data. Each thread also has its own private memory stack.

4. Write, compile, and run an OpenMP program

Basic requirements

The source code (C) needs to include the OpenMP header: #include <omp.h>

The compile command needs the -fopenmp flag.

Specify the number of threads with the environment variable OMP_NUM_THREADS.

OMP directives

OpenMP must be told when to parallelize.

For C/C++, a #pragma directive is used to annotate the code that should run in parallel:

#pragma omp somedirective clause(value, othervalue)
    parallel statement;

or

#pragma omp somedirective clause(value, othervalue)
{
    parallel statement 1;
    parallel statement 2;
    ...
}

Hands-on 1: create hello_omp.c

In the EXPLORER window, right-click on openmp and select New File. Type hello_omp.c as the file name and hit Enter. Enter the following source code in the editor window:

Line 1: Include omp.h, which declares the OpenMP runtime library functions.

Line 7: Declare the beginning of the parallel region. Pay attention to how the curly bracket is set up compared to the other curly brackets: it goes on its own line, because a #pragma directive occupies the whole line.

Line 10: omp_get_thread_num returns the ID assigned to the calling thread, which is assigned to a variable named tid of type int.

Line 15: omp_get_num_threads returns the number of threads in the current team (inside the parallel region this defaults to the value of OMP_NUM_THREADS), which is assigned to a variable named nthreads of type int.

What’s important?

tid and nthreads. They allow us to coordinate the parallel workloads.

Specify the environment variable OMP_NUM_THREADS.

$ export OMP_NUM_THREADS=4


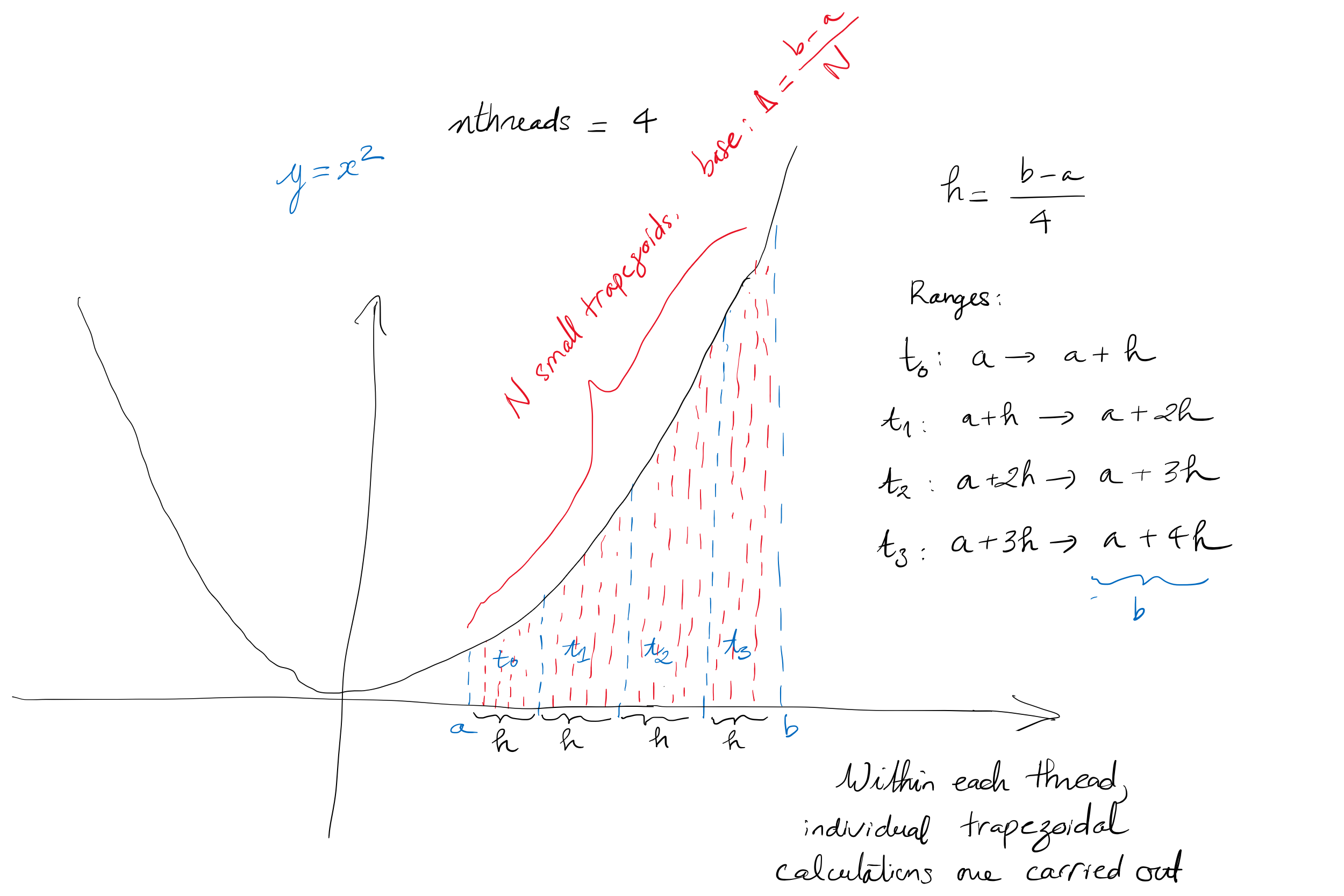

5. Trapezoid implementation

In the EXPLORER window, right-click on openmp and select New File. Type trapezoid.c as the file name and hit Enter. Enter the following source code in the editor window:

Compile and run trapezoid.c.

6. A bit more detailed

Modify trapezoid.c so that it looks like the following:

7. Challenges

Part 1:

Modify the trapezoid.c code so that the parallel region invokes a function to calculate the partial sum.


Part 2:

Write a program called sum_series.c that takes a single integer N as a command-line argument and calculates the sum of the first N non-negative integers. Speed up the summation portion by using OpenMP.

Assume N is divisible by the number of threads.


Part 3:

Write a program called sum_series_2.c that takes a single integer N as a command-line argument and calculates the sum of the first N non-negative integers. Speed up the summation portion by using OpenMP.

There is no assumption that N is divisible by the number of threads.


8. With timing

In the EXPLORER window, right-click on openmp and select New File. Type trapezoid_time.c as the file name and hit Enter. Enter the following source code in the editor window (you can copy the contents of trapezoid.c with the function from Challenge 1 as a starting point). Save the file when you are done.

How’s the run time?