MPI: pleasantly parallel and workload allocation

1. Definition

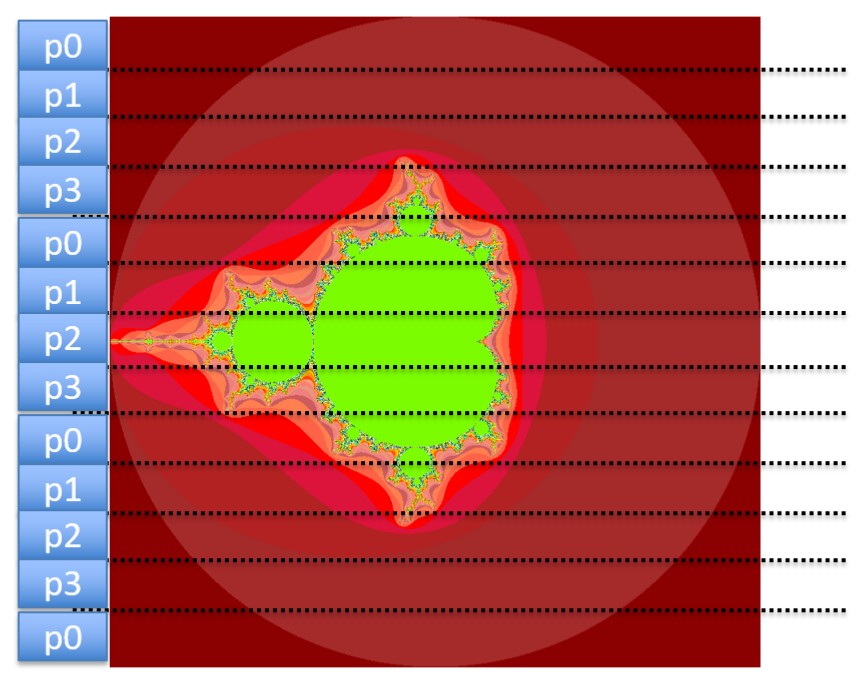

Embarrassingly/naturally/pleasantly parallel.

A computation that can obviously be divided into a number of completely independent parts, each of which can be executed by a separate process.

Each process can do its tasks without any interaction with the other processes; therefore, there is little or no communication among the processes.

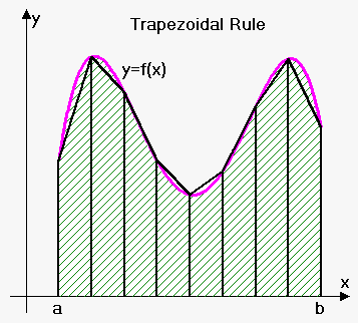

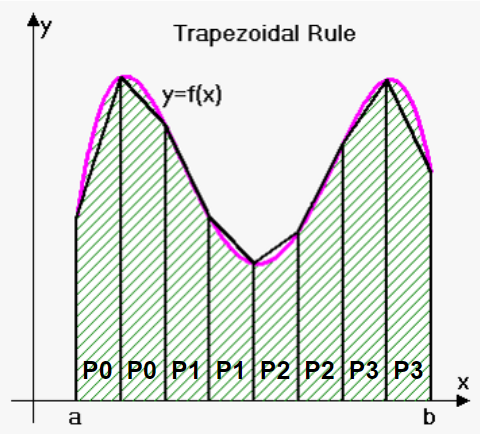

2. Example: integral estimation using the trapezoid method

4 processes: `P0`, `P1`, `P2`, `P3`

- `size` = 4
- `N` = 8
- `a` = 0
- `b` = 2

The height `h` can be calculated as: `h` = (`b` - `a`) / `N` = 0.25

The number of trapezoids per process: `local_n` = `N` / `size` = 2

`local_a` is the variable that represents the starting point of the local interval for each process, computed as `a + rank * local_n * h`. The variable `local_a` changes as a process finishes calculating one trapezoid and moves on to the next.

- `local_a` for `P0` = 0 = 0 + 0 * 2 * 0.25
- `local_a` for `P1` = 0.5 = 0 + 1 * 2 * 0.25
- `local_a` for `P2` = 1 = 0 + 2 * 2 * 0.25
- `local_a` for `P3` = 1.5 = 0 + 3 * 2 * 0.25
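The arithmetic above can be checked with a minimal serial sketch in plain C. It is not the course's MPI program: the loop over `rank` stands in for the separate processes, the integrand `f(x) = x * x` is a hypothetical choice (the source does not name one), and the helper names `local_start`, `trap`, and `integrate_block` are made up for illustration. In an actual MPI program, `rank` and `size` would typically come from `MPI_Comm_rank` and `MPI_Comm_size`, and the partial sums would be combined with something like `MPI_Reduce`.

```c
#include <assert.h>

/* Hypothetical integrand for illustration; the source does not name one. */
double f(double x) { return x * x; }

/* Start of the local interval owned by `rank` under block partitioning. */
double local_start(double a, double h, int rank, int local_n) {
    return a + rank * local_n * h;
}

/* Trapezoid rule over local_n trapezoids of width h starting at local_a. */
double trap(double local_a, int local_n, double h) {
    double sum = 0.0;
    for (int i = 0; i < local_n; i++) {
        double x0 = local_a + i * h;          /* left edge of trapezoid i */
        sum += (f(x0) + f(x0 + h)) * h / 2.0;
    }
    return sum;
}

/* Serial stand-in for the MPI run: loop over ranks and add partial sums. */
double integrate_block(double a, double b, int n, int size) {
    assert(size > 0 && n % size == 0);  /* sketch assumes N divides evenly */
    double h = (b - a) / n;
    int local_n = n / size;
    double total = 0.0;
    for (int rank = 0; rank < size; rank++) {
        total += trap(local_start(a, h, rank, local_n), local_n, h);
    }
    return total;
}
```

With the values above, `local_start(0.0, 0.25, 3, 2)` gives 1.5 for `P3`, and `integrate_block(0.0, 2.0, 8, 4)` gives 2.6875 for the assumed integrand (the exact integral of `x * x` over [0, 2] is 8/3).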

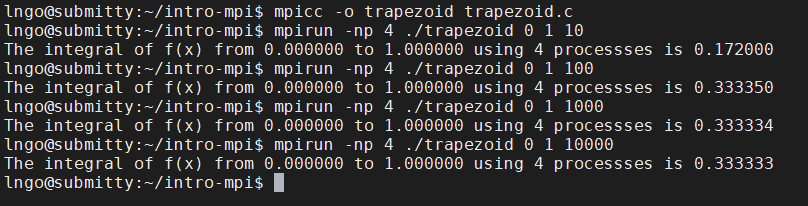

6. Hands-on: integral estimation using the trapezoid method

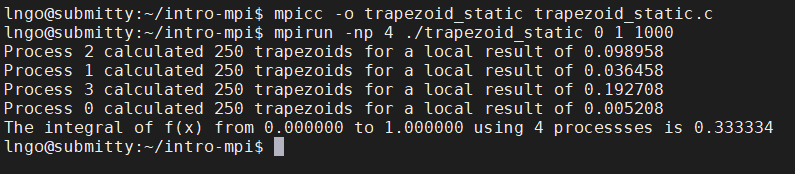

7. Hands-on: static workload assignment

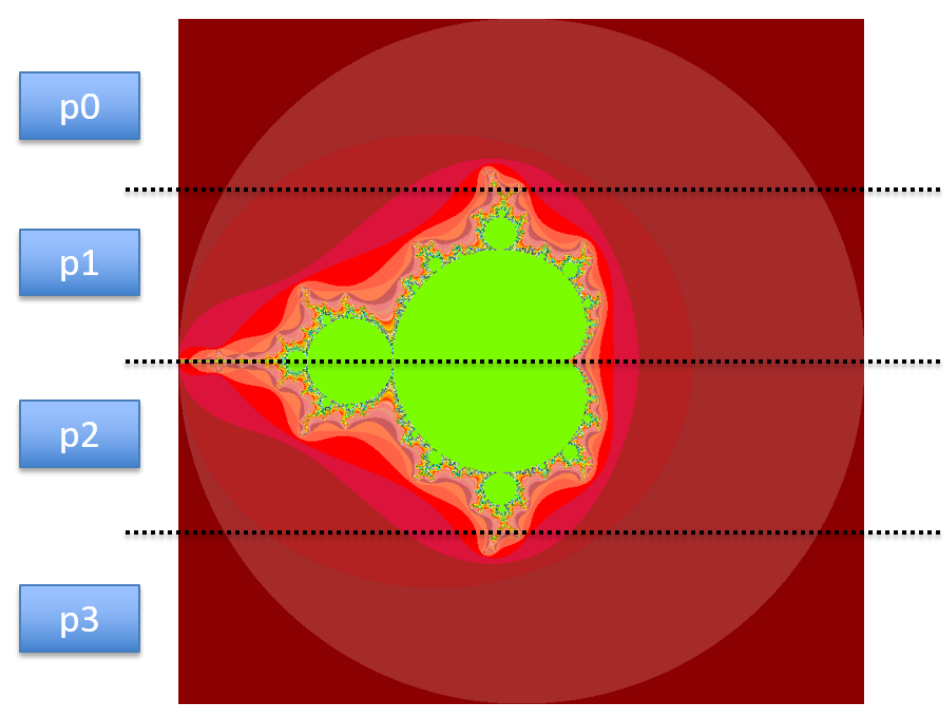

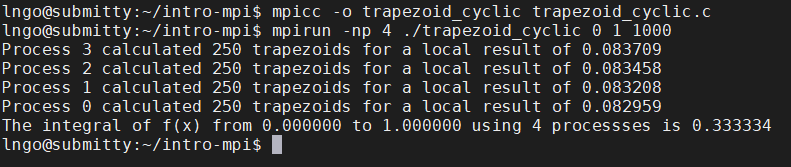

8. Hands-on: cyclic workload assignment

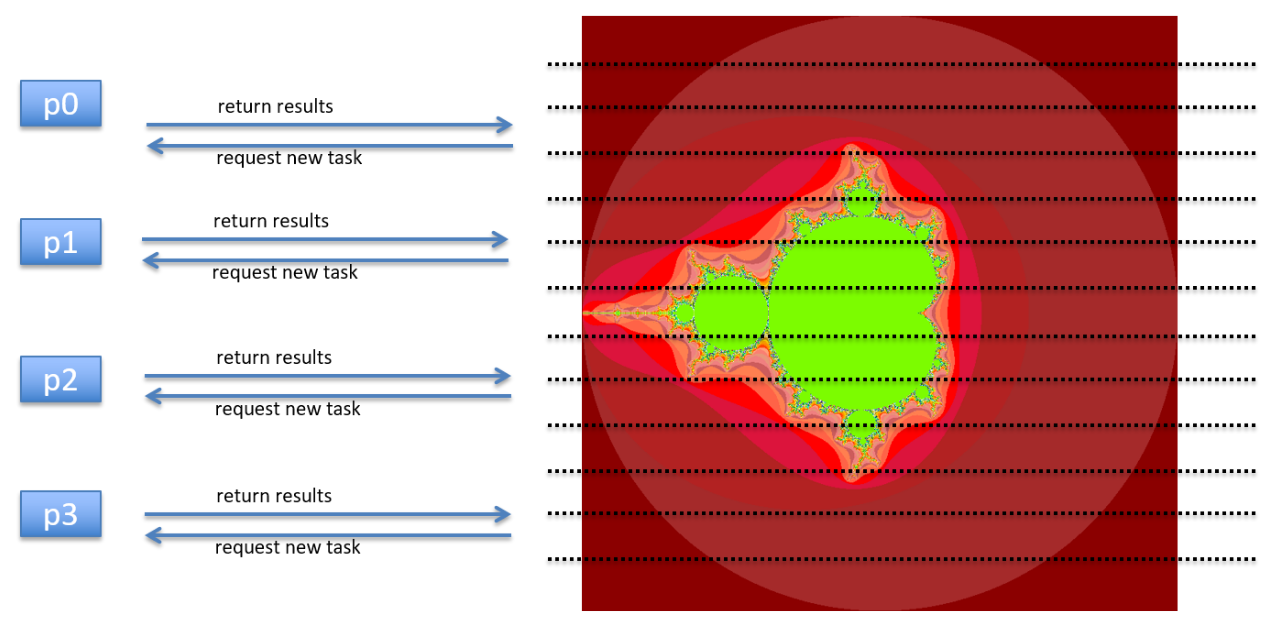
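As a rough illustration of cyclic assignment, the sketch below deals trapezoids out like cards: the process with a given `rank` takes trapezoids `rank`, `rank + size`, `rank + 2 * size`, and so on. This is a serial stand-in, not the hands-on MPI code; the integrand `f(x) = x * x` and the names `trap_cyclic` and `integrate_cyclic` are assumptions made for illustration.

```c
#include <assert.h>

/* Hypothetical integrand for illustration; the source does not name one. */
double f(double x) { return x * x; }

/* Partial sum for one rank: trapezoids rank, rank+size, rank+2*size, ... */
double trap_cyclic(double a, double h, int n, int rank, int size) {
    assert(size > 0 && rank >= 0 && rank < size);
    double sum = 0.0;
    for (int i = rank; i < n; i += size) {
        double x0 = a + i * h;                /* left edge of trapezoid i */
        sum += (f(x0) + f(x0 + h)) * h / 2.0;
    }
    return sum;
}

/* Serial stand-in for the MPI run: loop over ranks and add partial sums. */
double integrate_cyclic(double a, double b, int n, int size) {
    double h = (b - a) / n;
    double total = 0.0;
    for (int rank = 0; rank < size; rank++) {
        total += trap_cyclic(a, h, n, rank, size);
    }
    return total;
}
```

Unlike the block partition, this loop does not need `N` to be divisible by `size`: leftover trapezoids simply go to the lowest-numbered ranks.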

9. Hands-on: dynamic workload assignment

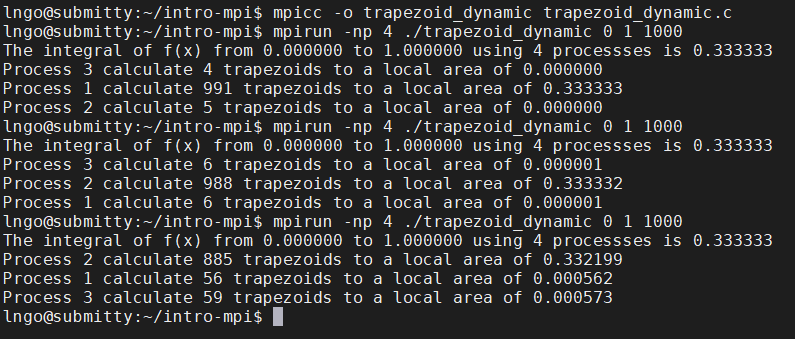

Create a file called `trapezoid_dynamic.c` with the following contents:

Compile and run `trapezoid_dynamic.c`:

$ mpicc -o trapezoid_dynamic trapezoid_dynamic.c

$ mpirun -np 4 ./trapezoid_dynamic 0 1 1000

$ mpirun -np 4 ./trapezoid_dynamic 0 1 1000

$ mpirun -np 4 ./trapezoid_dynamic 0 1 1000

This is called dynamic workload assignment.
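The bookkeeping behind dynamic assignment can be sketched serially in plain C. This is not the contents of `trapezoid_dynamic.c`: it only illustrates the idea that work is handed out one chunk at a time to whichever worker asks next. The names `next_chunk`, `trap_chunk`, and `integrate_dynamic`, the `chunk` parameter, and the integrand `f(x) = x * x` are all assumptions made for illustration. Here the workers ask in a fixed rotating order; in a real MPI run the order would depend on which process finishes first, and the requests would typically travel as messages (for example with `MPI_Send` and `MPI_Recv`).

```c
#include <assert.h>

/* Hypothetical integrand for illustration; the source does not name one. */
double f(double x) { return x * x; }

/* Hand out the next chunk of trapezoids; returns 0 when no work is left.
   *next holds the index of the first unassigned trapezoid. */
int next_chunk(int *next, int n, int chunk, int *start, int *count) {
    assert(chunk > 0);
    if (*next >= n) return 0;
    *start = *next;
    *count = (n - *next < chunk) ? n - *next : chunk;
    *next += *count;
    return 1;
}

/* Area of `count` trapezoids of width h beginning at trapezoid `start`. */
double trap_chunk(double a, double h, int start, int count) {
    double sum = 0.0;
    for (int i = start; i < start + count; i++) {
        double x0 = a + i * h;                /* left edge of trapezoid i */
        sum += (f(x0) + f(x0 + h)) * h / 2.0;
    }
    return sum;
}

/* Serial stand-in: workers request chunks in a rotating order (assumed).
   partial[] must have room for `size` entries, one per worker. */
double integrate_dynamic(double a, double b, int n, int size, int chunk,
                         double *partial) {
    assert(size > 0);
    double h = (b - a) / n;
    int next = 0, start = 0, count = 0, worker = 0;
    for (int w = 0; w < size; w++) partial[w] = 0.0;
    while (next_chunk(&next, n, chunk, &start, &count)) {
        partial[worker] += trap_chunk(a, h, start, count);
        worker = (worker + 1) % size;         /* next worker to ask */
    }
    double total = 0.0;
    for (int w = 0; w < size; w++) total += partial[w];
    return total;
}
```

The total does not depend on which worker receives which chunk (up to floating-point rounding), which is why the assignment can safely be decided at run time.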