MPI: point-to-point, data types, and communicators

1. Overview of MPI communications

Addresses in MPI

Data messages (objects) being sent/received (passed around) in MPI are referred to by their addresses:

The memory location to read from when sending.

The memory location to write to after receiving.

Parallel workflow

Individual processes rely on communication (message passing) to enforce workflow

Point-to-point communication: MPI_Send, MPI_Recv.

Collective communication: MPI_Bcast, MPI_Scatter, MPI_Gather, MPI_Reduce, MPI_Barrier.

2. Point-to-point: MPI_Send and MPI_Recv

MPI_Send

int MPI_Send(const void *buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)

buf: address of the memory holding the data elements to be sent.

count: how many data elements will be sent.

datatype (MPI_Datatype): MPI_BYTE, MPI_PACKED, MPI_CHAR, MPI_SHORT, MPI_INT, MPI_LONG, MPI_FLOAT, MPI_DOUBLE, MPI_LONG_DOUBLE, MPI_UNSIGNED_CHAR, or a user-defined type.

dest: rank of the process these data elements are sent to.

tag: an integer that identifies the message. The programmer is responsible for managing tags.

comm: communicator (typically just MPI_COMM_WORLD).

MPI_Recv

int MPI_Recv(void *buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status *status)

buf: address of the memory the received data elements will be written to.

count: the maximum number of data elements to receive.

datatype (MPI_Datatype): MPI_BYTE, MPI_PACKED, MPI_CHAR, MPI_SHORT, MPI_INT, MPI_LONG, MPI_FLOAT, MPI_DOUBLE, MPI_LONG_DOUBLE, MPI_UNSIGNED_CHAR, or a user-defined type.

source: rank of the process from which the data elements are received.

tag: an integer that identifies the message. The programmer is responsible for managing tags.

comm: communicator (typically just MPI_COMM_WORLD).

status: pointer to an MPI_Status struct that carries additional information about the received message, such as its actual source and tag.

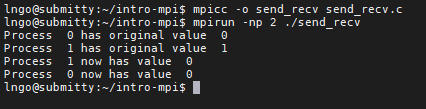

Hands-on: send_recv.c

We want to write a program called send_recv.c that allows two processes to exchange their ranks:

Process 0 receives 1 from process 1.

Process 1 receives 0 from process 0.

Inside intro-mpi, create a file named send_recv.c with the following contents

Compile and run send_recv.c:

$ mpicc -o send_recv send_recv.c

$ mpirun -np 2 ./send_recv

Did we get what we want? Why?

Correction: separate sending and receiving buffers.

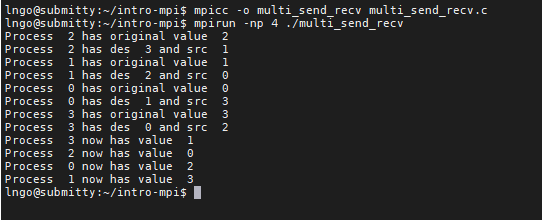

Hands-on: p2p communication at scale

Rely on rank, size, and arithmetic to work out which processes are adjacent.

We want to shift the data elements with only message passing among adjacent processes.
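The neighbor arithmetic can be sketched in plain C. The function names are hypothetical, and the wrap-around (the last rank's right neighbor is rank 0) is an assumption; a shift without wrap-around would treat the two end ranks specially instead.

```c
#include <assert.h>

/* Hypothetical helper: rank that receives our data in a ring of `size`. */
int right_neighbor(int rank, int size)
{
    assert(size > 0 && rank >= 0 && rank < size);
    return (rank + 1) % size;
}

/* Hypothetical helper: rank whose data we receive in a ring of `size`.
 * Adding size before the modulo keeps the result non-negative for rank 0. */
int left_neighbor(int rank, int size)
{
    assert(size > 0 && rank >= 0 && rank < size);
    return (rank - 1 + size) % size;
}
```

In an MPI program, rank and size would come from MPI_Comm_rank and MPI_Comm_size, and the two results would be used as the dest and source arguments of MPI_Send and MPI_Recv.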

Inside intro-mpi, create a file named multi_send_recv.c with the following contents

Compile and run multi_send_recv.c:

$ mpicc -o multi_send_recv multi_send_recv.c

$ mpirun -np 4 ./multi_send_recv

Did we get what we want? Why?

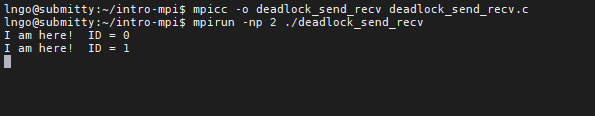

Hands-on: blocking risk

MPI_Recv is a blocking call: the process stops and waits until it receives the message.

What if the message never arrives?

Inside intro-mpi, create a file named deadlock_send_recv.c with the following contents

Compile and run deadlock_send_recv.c:

$ mpicc -o deadlock_send_recv deadlock_send_recv.c

$ mpirun -np 2 ./deadlock_send_recv

What happens?

To get out of the deadlock, press Ctrl-C.

Correction:

Pay attention to send/receive pairs.

The number of MPI_Send calls must always equal the number of MPI_Recv calls.

MPI_Send should be called first (preferably).